Optimization has become one of my favorite topics. OK, I’ll admit it: it might be an obsession and a source of joy. My inability to optimize (queries, data models, and DAX) used to be a source of headaches and the occasional heart attack depending on what I was trying to accomplish. I make no claim that I’m an optimization expert, but I’ve spent hours wondering and researching why a data model’s calculation time could go from a couple of seconds to many minutes. This is a rare occurrence, but when it has happened, it’s happened when I’ve been on the verge of something great. Of course, if you’re taking business intelligence to its edge, you’re not adding 2+2—you’re trying to give someone an answer they (and you) thought was impossible. A recalculation that takes many minutes (especially if you’re not the end-user) is unacceptable and may cause your data model to crash—fatal exceptions anyone?

This article focuses on an optimization technique that I couldn’t find anywhere. Before I get into that technique, I’d like to touch on what I consider to be the basic tenets of optimization when working with data modeling and DAX.

Basic Tenets of Optimization

Cardinality, filtering, and VAR

There is some great information on each of these topics, so I won’t go into too much detail, but it’s essential that each of these is used in conjunction with each other. I’d also submit that building a solution that focuses on cardinality, filtering, and VAR in that order is helpful. The reason for this order is that cardinality applies to build an efficient data model; filtering is one often one of the first tools used in DAX authoring; and, VAR is a refinement of DAX. One could argue that cardinality also applies to DAX (e.g., iterator functions), or that filtering applies heavily to query methodology, which it does. Some may be able to pull VARs out of a hat, but I can’t—I often need to write a convoluted DAX formula to see the VAR potential and then rewrite it.

Cardinality

Put simply, the more unique choices in a column, the greater the cardinality. For example, if there is a “yes/no” attribute, the cardinality of that column is low. If there is a unique transaction number for each of 100 million rows, cardinality is high. The basic optimization principle I focus on when building a data model is that, if I don’t need a column—especially if creates high cardinality—I don’t even bring it into the data model. If I do need it, I should really need it (i.e., there is no other solution).

Filtering

I’m reminded almost every day of the importance of filtering. It sounds pedestrian, but it is far from it. Filtering is an art, and it takes time to develop an eye for it. It can be performed as part of your query or part of your DAX.

VAR

Marco Russo introduced me to variables at a conference session when they first came out. It took me a while for the application of variables to sink in, but when it did…wow. If you’re referencing the same scalar value, function, or table in a DAX formula, you might be able to use VAR. In a nutshell, whenever you write the name of a function or table multiple times, the DAX mechanism makes an equal number of “calls” to your data. VAR simplifies to potentially one call, which can have a huge impact.

DAX Optimization Mantra: Write It Like the DAX Calls It

As I eluded to in the intro, I ran into an issue where a data model went from a refresh of seconds to many minutes (like 20). There was a lot going on in this refresh: database connections, multiple fact tables, 10+ dimensions, and lots of hearty DAX. I’ll save the gory details, but the decrease in performance came down to ONE DAX formula. That formula was the result of a beautiful, iterated string of DAX, just like I had been taught to do. But, it made my solution very cranky and here’s why…

The ”call” this bottleneck formula made on the data set was more than met the eye. When a measure is created that is, for example, a measure plus a measure plus a measure, the data call is effectively re-running each of those measures individually. In a previous post, I presented an approach to creating an income statement model. There were some relatively straightforward DAX in that data model, like calculating net income actuals, which will be the measure I use as an example. Here it is, a harmless looking DAX measure for net income actuals:

NI Act :=

[GP Act] – [OI&E Act] – [D&A Act] – [II&E Act] – [Taxes Act]

That said, if you analyze the measure above in DAX Studio, this is the call the measure is making on the data set:

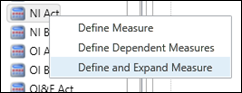

To get this information, I connected DAX Studio to my data model, then selected the measure, right clicked on it and selected “Define and Expand Measure.”

Lots of CALCULATE and lots of one repetitive filter, Scenarios[ScenarioName]=”Actual.” An optimized formula would reduce, in this case, the number of CALCULATES and the number of repetitive filters. In general, you’ll want to reduce the number of anything repetitious. At first glance, one would think that VAR would be a good candidate, but with all the filter context changes, VAR doesn’t work. It’s been explained to me why, but unfortunately, my memory is that “it just doesn’t work.” Instead, I first opted to create another “base” measure that filters GL amount on actuals:

GL Amt (Correct Signs) Act :=

CALCULATE (

SUMX ( Accounts, [SUM GL Amt] * Accounts[Report Sign] ),

Scenarios[ScenarioName] = “Actual”

)

I then used this measure, to calculate my newly optimized net income actuals measure:

NI Act Optimized :=

CALCULATE ( [GL Amt (Correct Signs) Act], Headers[Header] = “Revenue” )

– CALCULATE (

[GL Amt (Correct Signs) Act],

Headers[Header] = “Cost of Sales”

|| Headers[Header] = “Other Income & Expense”

|| Headers[Header] = “Depreciation & Amortization”

|| Headers[Header] = “Interest Income/Expense”

|| Headers[Header] = “Taxes”

)

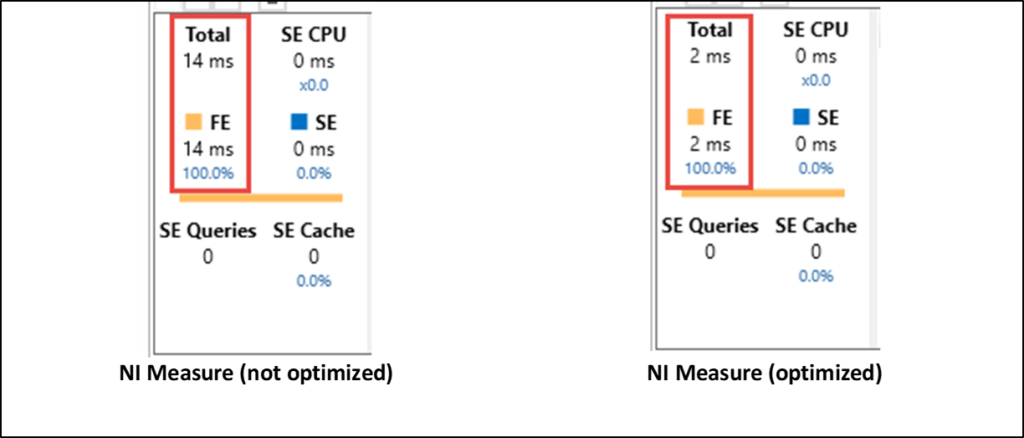

As you can see, instead of multiple formulas whose purpose is to adjust the filter context over and over, I’ve reduced it to one. When I rerun the formula in DAX Studio, this is the result:

Not bad, I’d say! Most of the CALCULATES have gone away as have much of the repetitive filtering. The performance improvement in terms of noticeable time isn’t that much because I wanted to give an easily-understood example, but the percentage reduction in time is astounding, from 14ms to 2ms or an 86% reduction in processing speed.

VIDEO: Optimizing & Analyzing DAX Query Plans

FILTER vs. CALCULATETABLE: optimization using cardinality estimation

Where It’s At: The Intersection of Biz, Human, and Tech*

We “give away” business-value-creating and escape-the-box-inspiring content like this article in part to show you that we’re not your average “tools” consulting firm. We’re sharp on the toolset for sure, but also on what makes businesses AND human beings “go.”

In three days’ time imagine what we can do for your bottom line. You should seriously consider finding out 🙂

* – unless, of course, you have two turntables and a microphone. We hear a lot of things are also located there.